Dear all,

Good day.

I recently completed a personal project and would like to share how it works. I walk through the implementation in a video; the link is included below for your reference.

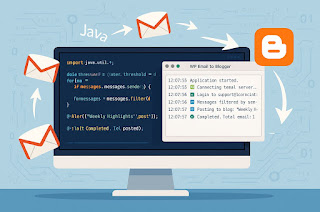

🛠️ Project Update: Automated Email-to-Blogger Integration Using Java & Google API

I'm excited to share a project I recently implemented: a Java-based automation tool that reads emails from a custom webmail account (WordPress-based IMAP server) and publishes them as blog posts on Google Blogger, automatically, every 24 hours.

📌 Key Highlights of the Solution:

✅ Tech Stack:

Java (Swing for GUI)

Google Blogger API (OAuth 2.0)

Jakarta Mail (IMAP email fetching)

Scheduled task execution (built-in Timer)

Real-time logging/status display via GUI
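
For readers curious how the 24-hour schedule could be wired up with the built-in Timer, here is a minimal sketch. The class name DailySyncScheduler and the job passed to it are hypothetical; the project's actual scheduling code is not shown in this post, so treat this as one possible shape rather than the real implementation.

```java
import java.util.Timer;
import java.util.TimerTask;
import java.util.concurrent.TimeUnit;

// Hypothetical class name; illustrates java.util.Timer scheduling only.
final class DailySyncScheduler {

    // 24 hours in milliseconds, matching the interval described in the post.
    static final long PERIOD_MS = TimeUnit.HOURS.toMillis(24);

    // Runs the job once immediately, then again every periodMs milliseconds.
    static Timer start(Runnable job, long periodMs) {
        // Daemon thread, so the timer alone does not keep the JVM alive.
        Timer timer = new Timer("email-to-blogger", true);
        timer.scheduleAtFixedRate(new TimerTask() {
            @Override
            public void run() {
                try {
                    job.run();
                } catch (RuntimeException e) {
                    // An uncaught exception would terminate the Timer thread,
                    // so failures are reported and the schedule continues.
                    System.err.println("Sync failed: " + e.getMessage());
                }
            }
        }, 0L, periodMs);
        return timer;
    }

    public static void main(String[] args) throws InterruptedException {
        // Placeholder job; the real one would fetch emails and publish posts.
        Timer timer = start(() -> System.out.println("Sync cycle started"), PERIOD_MS);
        Thread.sleep(200); // give the first run time to fire before the demo exits
        timer.cancel();
    }
}
```

Catching the exception inside the task matters here because java.util.Timer stops running all of its tasks once one of them throws.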

✅ Functionalities Implemented:

🔐 Authenticates with the Google Blogger API using OAuth2 credentials

📥 Connects securely to WordPress email (IMAP: mail.iconnectintl.com)

📨 Filters incoming emails from a dynamic list (email_list.txt)

📬 Verifies if emails are addressed to me (To, Cc, or Bcc)

📆 Only processes emails received in the last 24 hours

📝 Automatically publishes valid emails to Blogger as new blog posts

📁 Tracks already processed emails via processed_emails.txt to prevent duplicates

⚙️ Fully automated via internal scheduling — no manual intervention needed

🧩 Displays success/error logs and runtime status in a Swing-based GUI
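
The filtering rules listed above (allowed sender, addressed to me, received in the last 24 hours, not already processed) can be sketched in plain Java as follows. Everything here is illustrative: the EmailFilter and Mail names are hypothetical, the sender is assumed to be a bare email address, and both text files are assumed to hold one entry per line. The actual project works on Jakarta Mail messages, which this sketch replaces with a small stand-in class.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.time.Duration;
import java.time.Instant;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical names; mirrors the checks in the list above, not the project's code.
final class EmailFilter {

    // Minimal stand-in for a fetched message.
    static final class Mail {
        final String messageId;
        final String from;             // assumed to be a bare address
        final List<String> recipients; // To, Cc, and Bcc combined
        final Instant receivedAt;

        Mail(String messageId, String from, List<String> recipients, Instant receivedAt) {
            this.messageId = messageId;
            this.from = from;
            this.recipients = recipients;
            this.receivedAt = receivedAt;
        }
    }

    private final Set<String> allowedSenders = new HashSet<>(); // e.g. from email_list.txt
    private final Set<String> processedIds;                     // e.g. from processed_emails.txt
    private final String myAddress;

    EmailFilter(Set<String> allowedSenders, Set<String> processedIds, String myAddress) {
        for (String sender : allowedSenders) {
            this.allowedSenders.add(sender.trim().toLowerCase(Locale.ROOT));
        }
        this.processedIds = new HashSet<>(processedIds);
        this.myAddress = myAddress.trim();
    }

    // True only if the mail passes all four checks.
    boolean shouldPublish(Mail mail, Instant now) {
        boolean knownSender = allowedSenders.contains(mail.from.trim().toLowerCase(Locale.ROOT));
        boolean addressedToMe = mail.recipients.stream()
                .anyMatch(r -> r.trim().equalsIgnoreCase(myAddress));
        boolean recent = !mail.receivedAt.isBefore(now.minus(Duration.ofHours(24)));
        boolean unseen = !processedIds.contains(mail.messageId);
        return knownSender && addressedToMe && recent && unseen;
    }

    // Remembers the message in memory so it is skipped on later runs.
    void markProcessed(Mail mail) {
        processedIds.add(mail.messageId);
    }

    // Reads non-empty lines from a file; a missing file yields an empty set.
    static Set<String> loadLines(Path file) throws IOException {
        Set<String> lines = new HashSet<>();
        if (Files.exists(file)) {
            for (String line : Files.readAllLines(file, StandardCharsets.UTF_8)) {
                if (!line.trim().isEmpty()) {
                    lines.add(line.trim());
                }
            }
        }
        return lines;
    }

    // Appends one message ID to the tracking file, creating it if needed.
    static void appendProcessedId(Path file, String messageId) throws IOException {
        Files.write(file, Collections.singletonList(messageId), StandardCharsets.UTF_8,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
    }

    public static void main(String[] args) {
        // In-memory demo with made-up addresses and IDs.
        EmailFilter filter = new EmailFilter(
                new HashSet<>(Arrays.asList("news@example.com")),
                new HashSet<>(),
                "me@example.com");
        Instant now = Instant.now();
        Mail mail = new Mail("<id-1@example.com>", "news@example.com",
                Arrays.asList("me@example.com"), now.minusSeconds(3600));
        System.out.println("First pass:  " + filter.shouldPublish(mail, now));
        filter.markProcessed(mail);
        System.out.println("Second pass: " + filter.shouldPublish(mail, now));
    }
}
```

Keeping the decision in one method that takes the current time as a parameter makes the 24-hour rule easy to test without waiting for a real day to pass.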

🚀 Why This Matters:

This tool is particularly useful for:

Content teams managing newsletters or email-driven content workflows

Automation of blog publishing from structured email campaigns

Reducing manual efforts while ensuring timely content updates

🔄 This system can also be extended with:

WordPress REST API support for multi-platform publishing

Integration with Gmail, Outlook, or other IMAP-compatible servers

Support for rich HTML content parsing and attachments

🙌 A big thanks to the incredible open-source tools and APIs from Google, Jakarta EE, and the Java developer community. This project is a testament to the flexibility and power of Java in building robust automation tools.

📩 Feel free to connect if you're interested in setting up similar automated content pipelines or need help integrating APIs with Java.

https://youtu.be/cjsTGOK8grA

https://sriniedibasics.blogspot.com/

#Java #Automation #APIs #BloggerAPI #JakartaMail #OAuth2 #ContentAutomation #DeveloperTools #OpenSource #SoftwareEngineering #Blogging #Gmail #WordPress #Productivity #JavaDeveloper
